I’ve recently been looking at optimising a data load that I’m working on, and have got a quite a nice example of how the performance can be improved significantly by creating a CLR object.

To do this, I created a set of data (which is downloadable from here, is approx. 3mb and is extractable using 7-Zip ) which has approx. 200k records in it. The file has two columns (pipe delimited), which are a row id, and a set of events in a string (in the format “1,2,3,4,5”). Each string has a variable number of events in it (between 1 and 75), which looks something like this.

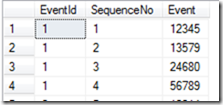

What I’m wanting to do is split this out, to have an Event Id, Sequence_Number and Event with the EventList above being split over multiple records, and a Sequence number showing the position of the event code in the list. Sounds straightforward.

I’m effectively looking for a quick way to generate this data.

T-SQL – Custom_Split

A custom script to split the table was created, looking like this:

create function [dbo].[Split]

(

@string nvarchar(4000),

@delimiter nvarchar(10)

)

returns @table table

(

[Value] nvarchar(4000)

)

begin

declare @nextString nvarchar(4000)

declare @pos int, @nextPos int

set @nextString = ''

set @string = @string + @delimiter

set @pos = charindex(@delimiter, @string)

set @nextPos = 1

while (@pos <> 0)

begin

set @nextString = substring(@string, 1, @pos - 1)

insert into @table

(

[Value]

)

values

(

@nextString

)

set @string = substring(@string, @pos + len(@delimiter), len(@string))

set @nextPos = @pos

set @pos = charindex(@delimiter, @string)

end

return

end

-- This code was taken from here: http://stackoverflow.com/questions/314824/t-sql-opposite-to-string-concatenation-how-to-split-string-into-multiple-recor/314833#314833 |

This was executed in Management Studio, with this script:

SELECT EventId,

IDENTITY (int, 1, 1) as SequenceNo,

Value as Event

INTO dbo.SQL_Events

FROM dbo.LargeFile

CROSS APPLY dbo.split(dbo.LargeFile.EventList, ‘,’) AS t

ORDER BY dbo.LargeFile.EventId |

Running this generated a table with 9.8 million rows and took 6 minutes 8 seconds, so handling approx. 26.6k records a second.

CLR – SplitEvents

To split the EventList in a CLR object, I did the following in Visual Studio 2010.

1. Choose the .NET framework, CLR Project

2. Right click the project when it’s created, and choose ‘Add –> Stored Procedure’.

In the body of the class, I used the following code:

using System;

using System.Data;

using System.Data.SqlClient;

using Microsoft.SqlServer.Server;public partial class StoredProcedures

{

[Microsoft.SqlServer.Server.SqlProcedure]

public static void CLR_Split()

{

// Put your code here

char[] delimiters = new char[] { ‘,’, ‘-‘ };

using (SqlConnection conn = new SqlConnection(“Context Connection=true”))

{

using (SqlCommand cmd = new SqlCommand(“SELECT EventId, EventList FROM LargeFile”, conn))

{

SqlPipe pipe = SqlContext.Pipe;

SqlMetaData[] cols = new SqlMetaData[3];

cols[0] = new SqlMetaData(“EventId”, SqlDbType.Int);

cols[1] = new SqlMetaData(“Sequence_No”, SqlDbType.Int);

cols[2] = new SqlMetaData(“Event”, SqlDbType.Int);

conn.Open();

using (SqlDataReader reader = cmd.ExecuteReader())

{

int iRow = 0;

if (reader.HasRows)

{

SqlDataRecord rec = new SqlDataRecord(cols);

pipe.SendResultsStart(rec);

while (reader.Read())

{

int iCTIQueueId = Convert.ToInt32(reader[0]);

string sIncoming = reader[1].ToString();

if (sIncoming.Length > 0)

{

string[] sSplit = sIncoming.Split(delimiters);

int iSplitCount = 1;

foreach (string s in sSplit)

{

try

{

int iSplitVal = Convert.ToInt32(s);

rec.SetSqlInt32(0, iCTIQueueId);

rec.SetSqlInt32(1, iSplitCount);

rec.SetSqlInt32(2, iSplitVal);

pipe.SendResultsRow(rec);

}

catch (Exception)

{

}

iSplitCount++;

}

}

iRow++;

}

pipe.SendResultsEnd();

}

else

pipe.Send(“”);

}

conn.Close();

}

}

}

}; |

Having compiled and deployed this to SQL Server, it could be run using this :

INSERT INTO CLR_Events (EventId, Sequence_No, Event)

EXEC CLR_Split |

This process created the 9.8 million rows in approx. 2 minutes 59 seconds, so handling approx. 54.7k records a second

Summary

So, having run these two processes, you can see that the CLR process is much faster.

| Process |

Time Taken |

Rec / Sec |

| T-SQL Stored Procedure |

6 minutes 8 sec |

26.6k |

| CLR Procedure |

2 minutes 59 sec |

54.7k |

Notes

When running these tests, I was running SQL Server 2008 R2, on a i5-430m laptop, with 4Gb RAM.

The database had plenty of space pre-allocated to it, so there was no performance hit based on growing the database.