While working with one of our customers over the past couple of days, and troubleshooting the performance issues on their Data Warehouse I found an interesting set of issue with Page Corruption.

Following on from the outstanding SQL Skills course I attended a few weeks ago (and as part of my ongoing MCM preparation), I thought it’d be an interesting exercise to run through my findings.

Step 1 – Why is everything so Slow?

Initially, I wanted to find out why the performance was so bad. I’ve been using Glenn Alan Barry’s Diagnostic scripts since I’ve found them to be really useful in the past. This one, to ‘Isolate top waits for Server instance’, gave me some really interesting figures. I ran this:

WITH Waits AS

(SELECT wait_type, wait_time_ms / 1000. AS wait_time_s,

100. * wait_time_ms / SUM(wait_time_ms) OVER() AS pct,

ROW_NUMBER() OVER(ORDER BY wait_time_ms DESC) AS rn

FROM sys.dm_os_wait_stats

WHERE wait_type NOT IN ('CLR_SEMAPHORE','LAZYWRITER_SLEEP','RESOURCE_QUEUE','SLEEP_TASK',

'SLEEP_SYSTEMTASK','SQLTRACE_BUFFER_FLUSH','WAITFOR', 'LOGMGR_QUEUE','CHECKPOINT_QUEUE',

'REQUEST_FOR_DEADLOCK_SEARCH','XE_TIMER_EVENT','BROKER_TO_FLUSH','BROKER_TASK_STOP','CLR_MANUAL_EVENT',

'CLR_AUTO_EVENT','DISPATCHER_QUEUE_SEMAPHORE', 'FT_IFTS_SCHEDULER_IDLE_WAIT',

'XE_DISPATCHER_WAIT', 'XE_DISPATCHER_JOIN', 'SQLTRACE_INCREMENTAL_FLUSH_SLEEP',

'ONDEMAND_TASK_QUEUE', 'BROKER_EVENTHANDLER', 'SLEEP_BPOOL_FLUSH'))

SELECT W1.wait_type,

CAST(W1.wait_time_s AS DECIMAL(12, 2)) AS wait_time_s,

CAST(W1.pct AS DECIMAL(12, 2)) AS pct,

CAST(SUM(W2.pct) AS DECIMAL(12, 2)) AS running_pct

FROM Waits AS W1

INNER JOIN Waits AS W2

ON W2.rn <= W1.rn

GROUP BY W1.rn, W1.wait_type, W1.wait_time_s, W1.pct

HAVING SUM(W2.pct) - W1.pct < 99 OPTION (RECOMPILE); -- percentage threshold

And this was the result:

Step 2 – What is BAD_PAGE_PROCESS ?

Having Google’d this, I found a Technet article saying that it was related to Suspect Pages.

BAD_PAGE_PROCESS Occurs when the background suspect page logger is trying to avoid running more than every five seconds. Excessive suspect pages cause the logger to run frequently.

Step 3 – What are the suspect pages ?

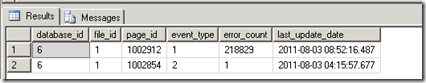

You can see what suspect pages you have (and ideally, there should be none), with this T-SQL:

select * from msdb.dbo.suspect_pages

Sadly, we had more than none.

Event type1 is an 823 error caused by an Operating system CRC error.

Event type 2 is a bad checksum.

Step 4 – Ok, Can DBCC CheckDB help ?

Next up, I used DBCC CheckDB to see what the issue was, and whether it could fix them. I used this command:

DBCC CHECKDB (<dbname>) WITH ALL_ERRORMSGS, NO_INFOMSGS

Having waited for quite a while for this to run, I got the following result.

Msg 8946, Level 16, State 12, Line 1 Table error: Allocation page (1:1002912) has invalid PFS_PAGE page header values. Type is 0. Check type, alloc unit ID and page ID on the page. Msg 8921, Level 16, State 1, Line 1 Check terminated. A failure was detected while collecting facts. Possibly tempdb out of space or a system table is inconsistent. Check previous errors. Msg 8909, Level 16, State 1, Line 1 Table error: Object ID 0, index ID -1, partition ID 0, alloc unit ID 0 (type Unknown), page ID (1:1002912) contains an incorrect page ID in its page header. The PageId in the page header = (0:0). Msg 8909, Level 16, State 1, Line 1 Table error: Object ID 0, index ID -1, partition ID 0, alloc unit ID 0 (type Unknown), page ID (1:1002912) contains an incorrect page ID in its page header. The PageId in the page header = (0:0). Msg 8998, Level 16, State 2, Line 1 Page errors on the GAM, SGAM, or PFS pages prevent allocation integrity checks in database ID 6 pages from (1:1002912) to (1:1010999). See other errors for cause. CHECKDB found 2 allocation errors and 1 consistency errors not associated with any single object. CHECKDB found 2 allocation errors and 1 consistency errors in database <dbname>.

That’s not so good, since, as Paul Randal says here:

The only repair for a PFS page is to reconstruct it – they can’t simply be deleted as they’re a fixed part of the fabric of the database. PFS pages cannot be rebuilt because there is no infallible way to determine which pages are allocated or not.

Ok, not a good thing, but the customer has a backup, so they are restoring that from tape at the moment. Once that’s accessible, I’ll restore the database, and reprocess the Data warehouse.

Step 5 – What are the errors on the page?

Since this is the first time I’ve seen Page corruption in the wild, and had a vague idea where to look, I thought I’d have a look at the corrupt pages.

To read the contents of a page, you can use DBCC PAGE.

First, here is the last successfully read page:

dbcc traceon(3604) go dbcc page(<dbname>,1,1002854,0)

This gave me this information (note the highlighted nextPage)

So lets look at the next page, using this :

dbcc traceon(3604)

go

dbcc page('DataWarehouse',1,1002855,0)

go

This started giving interesting results, since it is now pointing to page 0 as the next page.

Next, I looked at the page referenced in the DBCC error message above.

dbcc traceon(3604)

go

dbcc page('DataWarehouse',1,1002912,0)

go

Summary

In summary, we resolved the issues by restoring the database from a backup. It got the database up and running, and resolved the issue.

It was interesting looking through the issue, and seeing where the issue was caused.

WOW!! This has been a such a helpful article and so well written (very easy to understand for us non-tech kids).

Thanks Professor!

Hello colleagues, fastidious piece of writing and fastidious arguments commented here, I am truly enjoying by these.