So it’s TSQL Tuesday once more, and hosted by Jen McCown this month. Thanks Jen!

The topic is around tips, tactics and strategies for managing an Enterprise…

Well, having been in my current role for nearly a year now, which involves a degree of managing a number of SQL Servers, it’s a great opportunity to share what helps me.

1 – SQL Prompt

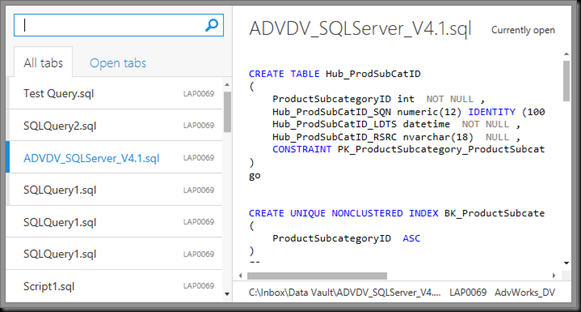

This is an add-in for SSMS produced by Redgate. While it does a wide range of awesome things, one of the main things that helps me, from an enterprise perspective, is the ability to colour code tabs. This makes it really easy to see which environment I’m running code against. For me, Green is dev, Purple is staging (and not quite live), and Red is Production (or requires caution!).

The other really nice feature is that when you close SSMS down (which occasionally happens accidentally, or when Windows Update does its thing, cos why would you deliberately close it!), SQL Prompt remembers all the tabs you had open, and reopens them (and reconnects them) on restart.

For me, this is great as it means that I can concentrate on the actual work, rather than waste time trying to think of a filename for some random query that may be of use at some point.

This is a really nice feature that allows me to run the same query on multiple servers. One of my main uses for this is to get a ‘snapshot’ of what is running on the servers, with sp_whoisactive. A query connected to one of these groups runs against each member server, and a column showing the server name is added to the start of the result set.

3 – Extended Events

I’ve had the chance to get to grips with this more over the past few months, and I’m really starting to find lots of great uses for it. One of the really helpful (Enterprise-related) things I’m using it for is monitoring execution times for a specific stored procedure. This is just for one SP, and it’s called thousands of times an hour. Using Extended Events for this allows tracking of these times with next to no overhead.
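To give a rough idea of the shape of this, here is a minimal sketch rather than the exact session I run. It assumes SQL Server 2012 or later (for the `event_file` target) and uses the `module_end` event filtered on the procedure name. The session name `TrackProcDuration`, the procedure name `MyProc` and the file path are all made up for illustration, so swap in your own.

```sql
-- Sketch only: session name, procedure name and file path are hypothetical.
CREATE EVENT SESSION [TrackProcDuration] ON SERVER
ADD EVENT sqlserver.module_end (
    -- Only capture completions of the one procedure we care about.
    WHERE ([object_name] = N'MyProc')
)
ADD TARGET package0.event_file (
    -- Hypothetical path; the SQL Server service account needs write access.
    SET filename = N'C:\XE\TrackProcDuration.xel',
        max_file_size = (50)   -- size in MB before rolling over to a new file
)
WITH (
    MAX_DISPATCH_LATENCY = 30 SECONDS,  -- buffer events, flush at most every 30s
    STARTUP_STATE = OFF                 -- do not auto-start with the instance
);
GO

-- Start collecting; use STATE = STOP to end the session.
ALTER EVENT SESSION [TrackProcDuration] ON SERVER STATE = START;
GO
```

Each captured event carries a `duration` field, which is what gets tracked over time. Filtering in the event predicate, rather than after collection, is a big part of what keeps the overhead down on a procedure called this often.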

I’m in the process of doing a separate post on this, so keep em peeled.

Thanks again to Jen for hosting.

I’ve also started a separate blog (separation of anxieties, or something),